A while ago, I was puzzled by repeated 404 “Not Found” errors in my server logs. The requests looked as if someone were typing out words a few letters at a time. This post shows what these odd 404s look like from the server’s perspective, then explains why they happen and why there is no practical way to prevent them.

Footprints in the sand

Here is an example of the 404 requests that we’re dealing with in this post:

http://example.com/word

http://example.com/wordpress-

http://example.com/wordpress-themes-

http://example.com/wordpress-themes-in-

http://example.com/wordpress-themes-in-dep

...

After a few moments of head-scratching, I realized that this footprint is left by Google’s “predictive URL” service. You know the one: Google tries to “guess” what you are looking for as you type in the address bar. In this case, the requests were coming from Chrome on Android. Here are a couple of complete log entries:

TIME: September 6th 2014, 04:26am

*404: http://example.com/word

SITE: http://example.com/

REFERRER: undefined

QUERY STRING: undefined

REMOTE ADDRESS: 123.456.789

REMOTE IDENTITY: undefined

PROXY ADDRESS: 123.456.789

HOST: 123.456.789.example.com

USER AGENT: Mozilla/5.0 (Linux; Android 4.2.1; Nexus 7 Build/JOP40D) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19

METHOD: GET

TIME: September 6th 2014, 04:27am

*404: http://example.com/wordpress-themes-

SITE: http://example.com/

REFERRER: undefined

QUERY STRING: undefined

REMOTE ADDRESS: 123.456.789

REMOTE IDENTITY: undefined

PROXY ADDRESS: 123.456.789

HOST: 123.456.789.example.com

USER AGENT: Mozilla/5.0 (Linux; Android 4.2.1; Nexus 7 Build/JOP40D) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19

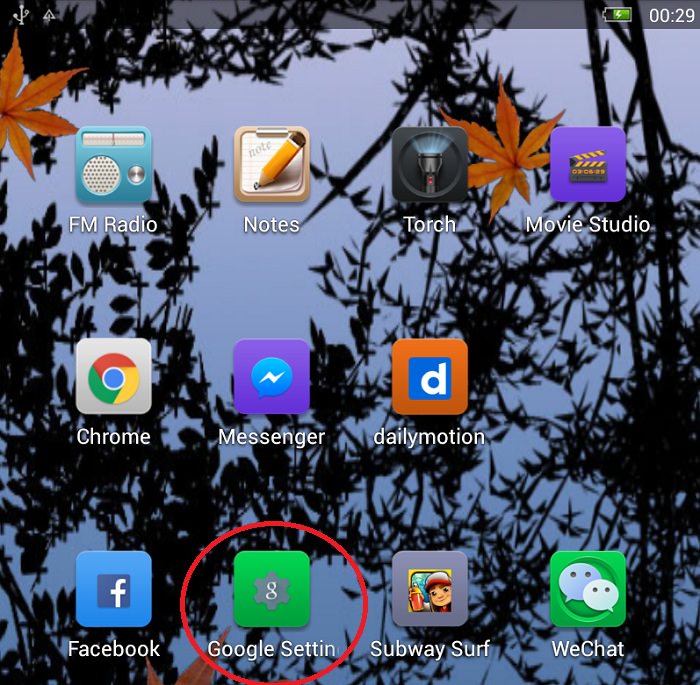

METHOD: GETSo after testing this, I confirmed that it happens when the “Google Predictive URLs” (aka “Search and URL Suggestions”) setting in Chrome is enabled. Just playing around for a few minutes, I managed to chalk up nearly a hundred “predictive” 404 errors on my server. I can’t even fathom the cumulative amount of bandwidth and resources this “feature” is wasting. Think millions and millions of devices requesting stacks of these requests every minute.. the actual numbers no-doubt would be mind-boggling.

Notice that they’re using full GET requests for each prediction, so they’re fetching the entire page when a simpler, less intensive HEAD request or similar would suffice. It would be interesting to know the reasoning behind this decision.

In any case, I’m not sure whether the predictive feature is still included in Chrome, or whether other browsers or devices provide similar functionality. I checked again before writing this post, and the setting still seems to be there, but I was unable to trigger any further 404s during my follow-up round of experimentation.
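
To look for this footprint in your own logs, you can check for one pattern: the same client requests a series of 404 paths, each one extending the previous one. The Python below is a minimal sketch that assumes you have already parsed your log into chronological `(remote_address, path)` pairs for 404 responses only. The function name `find_typing_runs`, the `min_len` threshold, and the sample addresses are hypothetical and do not come from any logging tool. The sketch ignores timestamps and backspacing, so treat its output as candidates to eyeball rather than proof.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def find_typing_runs(
    entries: Iterable[Tuple[str, str]], min_len: int = 3
) -> List[Tuple[str, List[str]]]:
    """Return (client, paths) runs where each 404 path extends the previous one.

    `entries` is assumed to be (remote_address, path) pairs for 404s only,
    in chronological order. This is a hypothetical helper, not a log-tool API.
    """
    by_client: Dict[str, List[str]] = defaultdict(list)
    for client, path in entries:
        by_client[client].append(path)

    runs: List[Tuple[str, List[str]]] = []
    for client, paths in by_client.items():
        current = [paths[0]]
        for prev, path in zip(paths, paths[1:]):
            if path != prev and path.startswith(prev):
                # Looks like more letters were typed: keep extending the run.
                current.append(path)
            else:
                # Pattern broken (new word, backspace, unrelated URL).
                if len(current) >= min_len:
                    runs.append((client, current))
                current = [path]
        if len(current) >= min_len:
            runs.append((client, current))
    return runs


if __name__ == "__main__":
    # Sample data modeled on the log above; 203.0.113.7 is a documentation address.
    sample = [
        ("203.0.113.7", "/word"),
        ("203.0.113.7", "/wordpress-"),
        ("203.0.113.7", "/wordpress-themes-"),
        ("203.0.113.7", "/wordpress-themes-in-"),
        ("203.0.113.7", "/wordpress-themes-in-dep"),
        ("198.51.100.4", "/old-page"),
    ]
    for client, run in find_typing_runs(sample):
        print(client, " -> ".join(run))
```

Grouping by remote address first means that interleaved traffic from other visitors does not break a run. The trade-off is that several people behind one proxy address would be lumped together.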

Prevention?

Unfortunately, there is not much that can be done on the server side to prevent, block, or control these redundant requests. Ideally, someone could write a script that serves a matching response to the predictive requests, so that the nearest matching resource is served after the second or third consecutive 404 from Chrome et al. Short of something like that, there would be no way to work around them without potentially damaging a site’s SEO.
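
Here is a rough idea of what such a script might look like, sketched in Python. Several parts are assumptions rather than anything Chrome or a web framework provides:

- The class `PredictiveFallback` and its `threshold` parameter are hypothetical.
- Clients are identified by remote address.
- “Nearest” is read as the shortest known path that starts with the partial path, so `/wordpress-themes-in-dep` maps to something like `/wordpress-themes-in-depth`.
- The site’s list of real paths is known up front.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple


class PredictiveFallback:
    """Hypothetical sketch: after `threshold` consecutive 404s from one client,
    answer with the closest known page instead of yet another 404."""

    def __init__(self, known_paths: Iterable[str], threshold: int = 2) -> None:
        # Sorted so that ties in nearest() are broken alphabetically.
        self._known = sorted(set(known_paths))
        self._known_set = set(self._known)
        self.threshold = threshold
        # Consecutive-miss counter per client. Unbounded in this sketch;
        # a real version would expire old entries.
        self._misses: Dict[str, int] = defaultdict(int)

    def nearest(self, partial: str) -> Optional[str]:
        """Shortest known path starting with `partial`, i.e. the most likely
        completion of a half-typed URL (one possible notion of "nearest")."""
        candidates = [p for p in self._known if p.startswith(partial)]
        return min(candidates, key=len) if candidates else None

    def handle(self, client: str, path: str) -> Tuple[int, Optional[str]]:
        """Return (status, redirect_target) for one request."""
        if path in self._known_set:
            self._misses[client] = 0
            return 200, None  # a real page: serve it normally
        prior = self._misses[client]
        self._misses[client] = prior + 1
        if prior >= self.threshold:
            target = self.nearest(path)
            if target is not None:
                return 302, target  # redirect to the likely completion
        return 404, None


if __name__ == "__main__":
    fallback = PredictiveFallback(["/about", "/wordpress-themes-in-depth"])
    for path in ["/word", "/wordpress-", "/wordpress-themes-", "/wordpress-themes-in-dep"]:
        print(path, fallback.handle("203.0.113.7", path))
```

With the default `threshold=2`, the first two misses from a client get ordinary 404s, and the third consecutive miss is redirected. The sketch answers with a 302 redirect rather than serving the page under the partial URL, so that each page keeps a single address. Whether that actually helps with SEO, and whether predictive requests follow redirects at all, are open assumptions.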

From the user’s perspective, the predictive requests can be stopped simply by disabling the browser setting, but I think it’s safe to say that 99.9% of the general population has literally no clue that any of this is even happening.

So until Google removes the feature from its browser (if it hasn’t already; again, I’m not sure), there really is no way to disable it from the server, short of whitelisting every URL on your domain. That is probably not recommended for a variety of reasons; it would just be overkill. Maybe just chalk it up as an additional operating cost, like a tax to support predictive search functionality.
